The Functional Logic of the Visual Information Processing Circuits

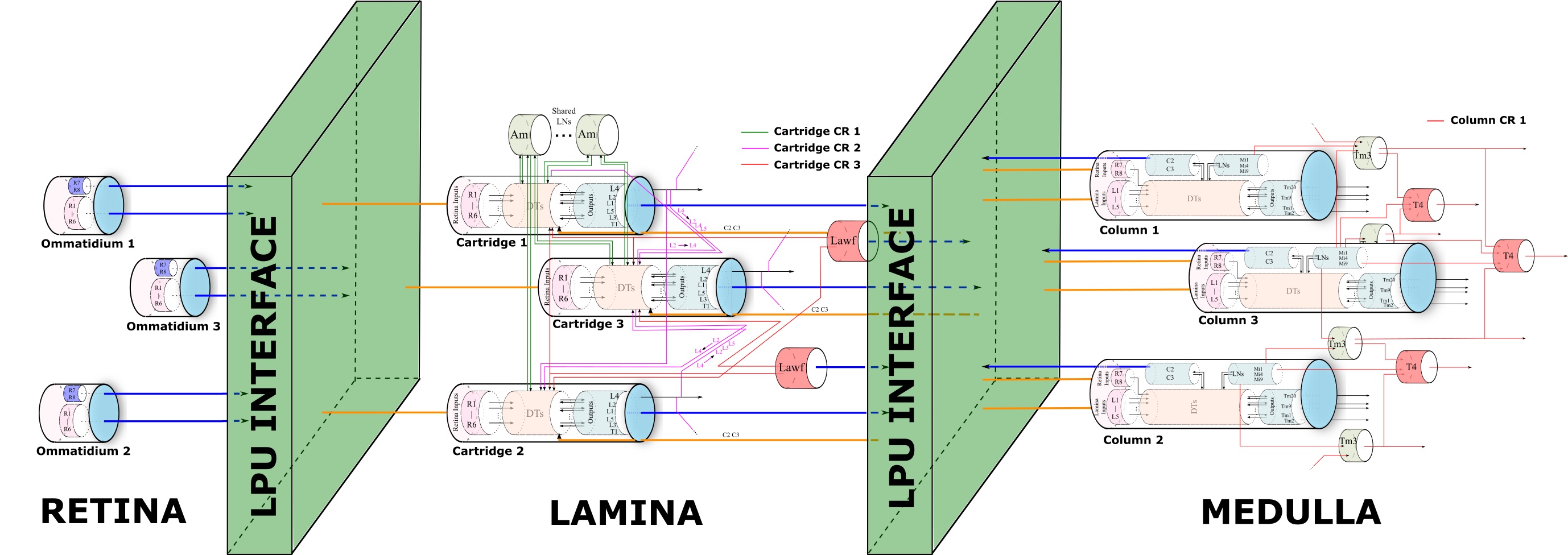

Our working model of the early vision system, consisting of the retina, lamina and medulla, mirrors the retinotopic representation of the visual field. Retinotopic organization is the topographic arrangement of visual pathways and visual centers that preserves the spatial layout of the retinal neurons responding to visual stimuli.

PhotoTransduction in the Retina of the Fruit Fly

The Drosophila retina has been extensively characterized in the literature in terms of structure, connectivity and function. Our investigations provide a detailed description of the algorithms required for a full-scale parallel emulation of the fruit fly retina, including (i) the mapping of the visual field onto the photoreceptors, (ii) the phototransduction process, and (iii) the parallel processing of the visual field by the entire retina. We also provide the implementation of these algorithms on GPUs and their visual evaluation with moving images.

The lamina neuropil and the retina of the early visual system of the fruit fly brain are described, respectively, by the two publications below. Open source code and documentation are available as part of the FlyBrainLab Retina PhotoTransduction Library.

- Aurel A. Lazar, Nikul H. Ukani and Yiyin Zhou, The Cartridge: A Canonical Neural Circuit Abstraction of the Lamina Neuropil - Construction and Composition Rules, Neurokernel Request for Comments, Neurokernel RFC #2, January 2014.

- Aurel A. Lazar, Konstantinos Psychas, Nikul H. Ukani and Yiyin Zhou, A Parallel Processing Model of the Drosophila Retina, Neurokernel Request for Comments, Neurokernel RFC #3, August 2015.

The video above shows a visual evaluation of the fruit fly retina as a parallel information pre-processor. The light intensity of the original scene is shown on the top left. The red circle indicates the visual aperture of the eye. The logarithm of the original scene is shown on the top right. On the bottom left, “Visual Aperture” shows the projection of the area inside the red circle in the original scene onto the hemispherical visual field. “Input to R1” shows the rate of photons arriving at the R1 photoreceptors. “Delayed by 40 [ms]” shows the “Input to R1” delayed by 40 [ms]. “log(Visual Aperture)”, “log(Input)” and “log(Delayed by 40 [ms])” show the log of the plot in, respectively, “Visual Aperture”, “Input to R1” and “Delayed by 40 [ms]”. Note that “Input to R1” is the actual input to the photoreceptors. On the bottom right, the responses of photoreceptors R1-R6 are shown, respectively, in each block. All hemispheres are viewed orthogonal to their base plane. As a result, they appear to be circular.
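The "Delayed by 40 [ms]" and "log(...)" panels are simple transformations of the photoreceptor input. The sketch below illustrates them on a one-dimensional trace. It is not taken from the FlyBrainLab library: the function names, the 1 ms sampling step, the hold-first-sample padding and the synthetic photon-rate trace are all assumptions made for illustration.

```python
import numpy as np

def delay_signal(x, dt, delay=0.040):
    """Delay a time series (time on axis 0) by `delay` seconds.

    The first sample is held during the initial `delay` seconds; this
    padding rule is an assumption of the sketch.
    """
    x = np.asarray(x, dtype=float)
    n = min(int(round(delay / dt)), x.shape[0])
    if n == 0:
        return x.copy()
    pad = np.repeat(x[:1], n, axis=0)
    return np.concatenate([pad, x[:x.shape[0] - n]], axis=0)

def log_view(x, floor=1e-6):
    """Base-10 logarithm of a non-negative signal, clipped away from zero."""
    return np.log10(np.maximum(x, floor))

# Hypothetical photon-rate trace for one photoreceptor, sampled every 1 ms.
dt = 1e-3
t = np.arange(0.0, 0.2, dt)
photon_rate = 1000.0 * (1.0 + 0.5 * np.sin(2 * np.pi * 10 * t))

delayed = delay_signal(photon_rate, dt)   # analogue of "Delayed by 40 [ms]"
log_input = log_view(photon_rate)         # analogue of "log(Input)"
log_delayed = log_view(delayed)           # analogue of "log(Delayed by 40 [ms])"
```

For a full visual field the same functions can be applied to an array with time on the first axis and one column per photoreceptor.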

SpatioTemporal Encoding and Retina/Lamina Contrast Gain Control

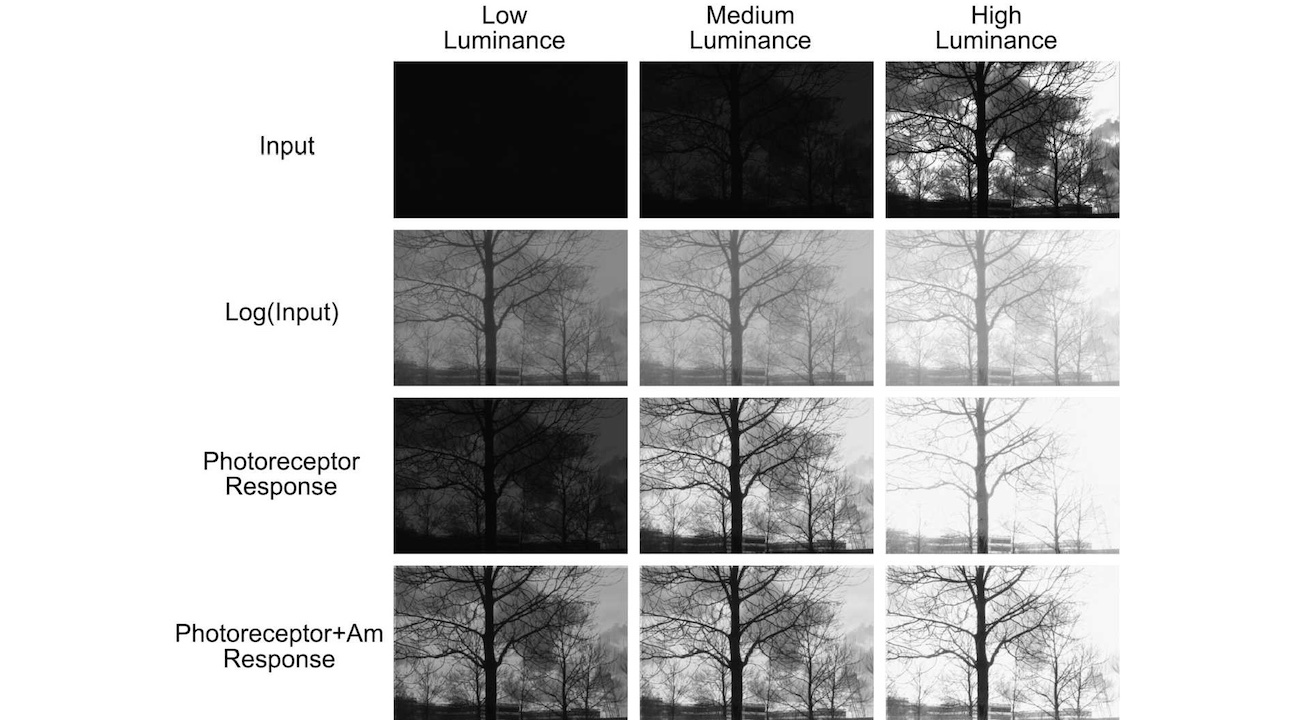

The photoreceptor/amacrine cell layer of the early vision system of the fruit fly rapidly adapts to visual stimuli whose intensity and contrast vary by orders of magnitude in both space and time. We proposed a spatio-temporal divisive normalization processor (DNP) that models the transduction and the contrast gain control in the photoreceptor and amacrine cell layer of the fruit fly. It incorporates processing blocks that explicitly model the feedforward and the temporal feedback path of each photoreceptor, as well as the spatio-temporal feedback from amacrine cells to photoreceptors. We demonstrated that, with a simple choice of parameters, the DNP response maintains the contrast of the input visual field across a large range of average spatial luminance values.

- Aurel A. Lazar, Nikul H. Ukani, and Yiyin Zhou, Sparse Identification of Contrast Gain Control in the Fruit Fly Photoreceptor and Amacrine Cell Layer, The Journal of Mathematical Neuroscience, Volume 10, Number 3, Springer Open, February 2020.

The figure above depicts the steady state I/O visualization of the spatio-temporal DNP for natural images. Top row: input images. Second row: input images visualized on a logarithmic scale. Third row: responses without the MVP blocks that model the spatio-temporal feedback due to amacrine cells. Fourth row: responses with the MVP blocks. Left column: stimuli presented at low luminance; middle column: medium luminance; right column: high luminance.
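The effect of divisive normalization on luminance can be illustrated with a much simpler model than the spatio-temporal DNP. The sketch below is a static, purely spatial normalization of a one-dimensional signal, in which each sample is divided by a constant plus the mean of its neighborhood. It is not the model of the paper cited above: the function name, the box-shaped pool, the constant `b` and the synthetic scene are assumptions chosen only to show why the response becomes nearly independent of the overall luminance.

```python
import numpy as np

def divisive_normalization(u, pool_width=9, b=1e-2):
    """Divide each sample by `b` plus the mean of its neighborhood.

    `pool_width` must be odd. Static and spatial only, unlike the
    spatio-temporal DNP described in the text.
    """
    u = np.asarray(u, dtype=float)
    half = pool_width // 2
    kernel = np.ones(pool_width) / pool_width
    # Replicate the border values so the pool is defined at the edges.
    padded = np.pad(u, half, mode="edge")
    pool = np.convolve(padded, kernel, mode="valid")
    return u / (b + pool)

# Hypothetical 1D "scene" with values in [0.2, 1], shown at three luminances.
rng = np.random.default_rng(0)
scene = 0.2 + 0.8 * rng.random(256)
low = divisive_normalization(1e2 * scene)
medium = divisive_normalization(1e3 * scene)
high = divisive_normalization(1e4 * scene)

# Largest relative difference between the low- and high-luminance responses.
max_rel_diff = np.max(np.abs(low - high) / high)
```

Once the pooled luminance is large compared with `b`, the output approaches the ratio of each sample to its local mean, so scaling the input by a factor of 100 barely changes the response.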

Motion Detection Using Local Phase Information

Under the phase congruency model, the spatial phase of an image is indicative of local features such as edges. We have devised a motion detection algorithm based on local phase information and constructed a fast, parallel algorithm for its real-time implementation. Our results suggest that local spatial phase information may provide an efficient alternative for performing many visual tasks, both in silico and in in vivo biological vision systems.

- Aurel A. Lazar, Nikul H. Ukani and Yiyin Zhou, A Motion Detection Algorithm Using Local Phase Information, Computational Intelligence and Neuroscience, Volume 2016, January 2016.

The following video shows motion detected in the "train station video" by the phase-based motion detection algorithm, and compares the result with that of the Reichardt motion detector and the Barlow-Levick motion detector.

The top row shows motion detected by the phase-based motion detection algorithm (left), the Reichardt motion detector (middle) and the Barlow-Levick motion detector (right). Red arrows indicate detected motion and its direction. In the bottom row, the contrast of the video was artificially reduced 5-fold and the mean was reduced to 3/5 of the original. The red arrows are duplicated from the output of the motion detectors on the original video, as shown in the top row. Blue arrows are the result of motion detection on the video with reduced contrast. If motion is detected both in the original video and in the video with reduced contrast, the corresponding arrow is shown in magenta (a mix of blue and red).
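A toy one-dimensional example can illustrate why a phase-based estimate is expected to survive this kind of contrast manipulation while a correlation-based output shrinks. The sketch below is not the algorithm of the paper cited above, which uses local phase in space-time video. It uses the phase of a single global Fourier coefficient and a stripped-down Reichardt-style correlator, and it reads "contrast reduced 5-fold" as dividing deviations from the mean by 5. All function names and parameters are assumptions made for illustration.

```python
import numpy as np

def reduce_contrast(frame, contrast_factor=1 / 5, mean_factor=3 / 5):
    """Scale deviations from the mean by 1/5 and the mean itself by 3/5."""
    mu = frame.mean()
    return mean_factor * mu + contrast_factor * (frame - mu)

def phase_shift_estimate(f0, f1, k=3):
    """Displacement in samples, from the phase change of Fourier bin k."""
    n = f0.size
    dphi = np.angle(np.fft.fft(f1)[k] * np.conj(np.fft.fft(f0)[k]))
    return -dphi * n / (2 * np.pi * k)

def correlator_output(f0, f1):
    """Opponent delay-and-correlate signal summed over space (circular)."""
    # Delayed left input times current right input, minus the mirror term.
    return np.sum(f0 * np.roll(f1, -1) - np.roll(f0, -1) * f1)

# Hypothetical periodic 1D scene that moves 2 samples between two frames.
rng = np.random.default_rng(1)
frame0 = rng.random(128)
frame1 = np.roll(frame0, 2)

dim0, dim1 = reduce_contrast(frame0), reduce_contrast(frame1)

shift_original = phase_shift_estimate(frame0, frame1)
shift_reduced = phase_shift_estimate(dim0, dim1)
corr_original = correlator_output(frame0, frame1)
corr_reduced = correlator_output(dim0, dim1)
```

In this toy setting the phase-based displacement estimate is the same for both versions of the input, because an affine change of intensity with positive gain rescales every non-DC Fourier coefficient without rotating it. The correlator output falls by a factor of 25, the square of the contrast reduction, so a fixed detection threshold tuned on the original input could miss the motion in the dimmed one.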

Functional Logic of the Early Visual System

Two of the key processing steps in the motion detection pathway, the elementary motion detector and the gain control mechanism, can both be modeled with Divisive Normalization Processors.

- Aurel A. Lazar and Yiyin Zhou, Divisive Normalization Processors in the Early Visual System of the Drosophila Brain, Biological Cybernetics, Special Issue: What can Computer Vision Learn from Visual Neuroscience?, September 2023.

- Bruce Yi Bu, Aurel A. Lazar, and Yiyin Zhou, End-to-End Modeling of the Drosophila Early Vision System with Cascading Divisive Normalization Processors, Neuroscience 2024, Society for Neuroscience, October 5 - 9, 2024, Chicago, IL.

- Shashwat Shukla, Yiyin Zhou, and Aurel A. Lazar, Modeling Small Object Detection of Drosophila Lobula Circuits with Divisive Normalization Processors, Neuroscience 2024, Society for Neuroscience, October 5 - 9, 2024, Chicago, IL.
